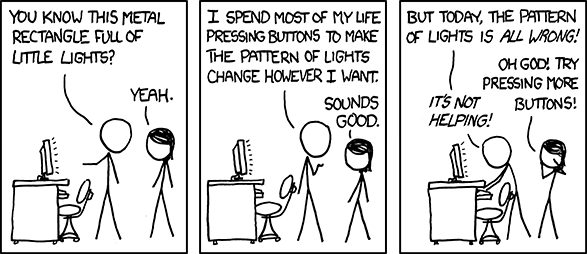

Why observability matters

Written by Devhouse Spindle on 9th October 2018

In the zone

You’re sitting behind your computer on a Friday afternoon, typing away on your Cherry MX Red keyboard that sounds like a stampeding herd of bison. You’re making sure everybody knows that you’re working on the ‘Next Big Thing’™ and nothing is going to stop you from finishing it before the weekend. But just as you push your code to the master branch and let your CI/CD pipeline release the new version of your software, disaster strikes. Angry emails, phone calls and tweets from customers start pouring in. What appears to be a mob of sysadmins with pitchforks and torches is forming outside your office window. You broke production. Now what?

Resurrecting your application

This, of course, is an exaggerated doom scenario, but the point is that stuff breaks. Just as a coroner performs an autopsy after a patient has died, it is your job to get your hands into the innards of your application and find the cause of death. That’s where the analogy breaks down, since I’ve yet to hear of a coroner bringing a patient back to life after determining the cause of death. But that is exactly what you’re going to do. With the wrath of Linus Torvalds himself on our side, we will Dr. Frankenstein this thing back to life so people can share their cute cat videos with each other again!

What is observability?

But how do we get to a point where we’re not just messing around in the corpse like a kid in a bathtub? It all starts with building observability into your application. Wikipedia defines observability as:

“A measure of how well internal states of a system can be inferred from knowledge of its external outputs.”

To return to our morbid example: a patient whose medical records show heart rate, blood pressure and brain activity at the time of death offers far better observability than a corpse handed over without any information.

Prove it using data

Increasing observability lets us look at our application and clearly see what happened to it without having to guess. That’s why we need data to back up our hypotheses and build a complete picture of what happened. This data can come from many sources, but the two most important ones, which I’ll address in this post, are logs and metrics. Knowing when to use which is key to determining the cause of death. So get out your latex gloves and put on your face shield, because we’re going down into the very bowels of our application to find out where our assumptions went awry.

Metrics

Metrics are the crude oil that you can extract from your application. In essence, a metric is nothing more than a value recorded at a point in time. For example, you could measure the response time of your application by probing your website every 15 seconds and saving each result to a database.
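To make “a value at a point in time” concrete, here is a minimal Python sketch of that probe, assuming a plain SQLite table as a stand-in for a real metrics store. The URL, the table layout and the names `open_db`, `store_sample`, `probe` and `probe_forever` are hypothetical and only for illustration.

```python
import sqlite3
import time
import urllib.request

# Hypothetical values for illustration; point these at your own site.
TARGET_URL = "https://example.com/"
PROBE_INTERVAL_SECONDS = 15


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a table holding one row per sample."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS samples (name TEXT, timestamp REAL, value REAL)"
    )
    return db


def store_sample(db: sqlite3.Connection, name: str, timestamp: float, value: float) -> None:
    """A metric sample is just a name, a point in time and a value."""
    db.execute(
        "INSERT INTO samples (name, timestamp, value) VALUES (?, ?, ?)",
        (name, timestamp, value),
    )
    db.commit()


def probe(url: str, timeout: float = 5.0) -> float:
    """Time a single GET request to `url`, in seconds."""
    start = time.monotonic()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        response.read()
    return time.monotonic() - start


def probe_forever(db: sqlite3.Connection) -> None:
    """Probe the site every PROBE_INTERVAL_SECONDS and record the response time."""
    while True:
        try:
            store_sample(db, "response_time_seconds", time.time(), probe(TARGET_URL))
        except OSError:
            # Network errors (URLError, timeouts) are simply skipped in this sketch;
            # a real setup would record failures as a metric of their own.
            pass
        time.sleep(PROBE_INTERVAL_SECONDS)
```

Calling `probe_forever(open_db("metrics.db"))` would keep appending one row every 15 seconds. It works for a demo, but a purpose-built time series database handles this job far better at scale.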

Counters and gauges

The two most commonly used metric types are counters and gauges. A counter, as the name implies, simply counts the number of times an event has happened. For example, the number of visits to your website could be a counter. Note that a counter can only increase in value unless it is reset (which typically happens when the application restarts).

A gauge, on the other hand, as you might’ve guessed, gauges the current value of something. A good example is memory usage, which can both increase and decrease depending on the situation.
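The difference between the two types fits in a minimal Python sketch using only the standard library. The class and method names are hypothetical; client libraries such as Prometheus’s offer types in a similar spirit, but this is not their API.

```python
import threading


class Counter:
    """Counts events. The value only goes up, until it is reset."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._value = 0.0
        self._lock = threading.Lock()  # several threads may record events at once

    def inc(self, amount: float = 1.0) -> None:
        if amount < 0:
            raise ValueError("a counter can only increase")
        with self._lock:
            self._value += amount

    def reset(self) -> None:
        with self._lock:
            self._value = 0.0

    @property
    def value(self) -> float:
        return self._value


class Gauge:
    """Holds a current value that can go up as well as down."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._value = 0.0
        self._lock = threading.Lock()

    def set(self, value: float) -> None:
        with self._lock:
            self._value = value

    def inc(self, amount: float = 1.0) -> None:
        with self._lock:
            self._value += amount

    def dec(self, amount: float = 1.0) -> None:
        with self._lock:
            self._value -= amount

    @property
    def value(self) -> float:
        return self._value
```

In use, you would call `visits.inc()` on a hypothetical `Counter("website_visits_total")` for every request, and `memory.set(...)` on a `Gauge("memory_used_bytes")` whenever you measure memory. Refusing negative increments is what keeps a counter a counter.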

Gaining insights through metrics

In the end, by analyzing these metrics you can answer general questions about your system that matter to you. How many hits did the website get after we published that new press release? Are our servers’ CPUs still fast enough to handle traffic spikes?

In most modern monitoring systems, these metrics are either actively pushed from inside the application or server to a central location (StatsD, Zabbix) or passively scraped on an interval (Prometheus). Which type of monitoring system you should use depends on the needs of your system, but that’s a whole can of worms I’ll leave for someone else to open.
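To illustrate the push and pull styles, here is a rough sketch of both wire formats. It assumes the StatsD line protocol (`name:value|type`, sent over UDP, conventionally to port 8125) for the push side, and the Prometheus text exposition format (a `# TYPE` line followed by `name value`) for the pull side. The helper names are hypothetical, and real client libraries handle many more details such as labels, escaping and HELP lines.

```python
import socket


def _fmt(value: float) -> str:
    """Print whole numbers without a trailing '.0'."""
    return str(int(value)) if float(value).is_integer() else repr(float(value))


def statsd_line(name: str, value: float, metric_type: str) -> str:
    """Format a metric in the StatsD line protocol, e.g. 'website_visits:1|c'."""
    if metric_type not in ("c", "g", "ms"):  # counter, gauge, timer
        raise ValueError(f"unsupported StatsD type: {metric_type!r}")
    return f"{name}:{_fmt(value)}|{metric_type}"


def push_to_statsd(line: str, host: str = "127.0.0.1", port: int = 8125) -> None:
    """Push model: the application sends one UDP datagram to a StatsD daemon."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (host, port))


def prometheus_text(metrics: dict) -> str:
    """Pull model: render {name: (type, value)} as Prometheus text exposition,
    which an HTTP endpoint would return whenever the server scrapes it."""
    lines = []
    for name, (metric_type, value) in sorted(metrics.items()):
        lines.append(f"# TYPE {name} {metric_type}")
        lines.append(f"{name} {_fmt(value)}")
    return "\n".join(lines) + "\n"
```

The design difference shows in who initiates the transfer: `push_to_statsd` is called from inside the application, while `prometheus_text` only prepares a page and waits for the monitoring server to come and fetch it.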

Time Series Databases

Once received, the metrics are stored in a database. Most monitoring systems use a type of database called a time series database, or TSDB for short. These differ from standard SQL databases in that they are purpose-built for time series data rather than relational data. They provide powerful query capabilities that let you ask things like “How much did our memory usage increase over the last hour?” or “How many people visited our website per second over the last week?”
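Those two example questions map onto simple calculations over `(timestamp, value)` samples. The sketch below is a toy in-memory version, roughly in the spirit of PromQL’s `delta()` for gauges and `increase()`/`rate()` for counters, but without the extrapolation a real TSDB applies. All function names are hypothetical.

```python
def in_window(samples, start, end):
    """Keep (timestamp, value) samples with start <= timestamp <= end, in time order."""
    return sorted((t, v) for t, v in samples if start <= t <= end)


def delta(samples, start, end):
    """Gauge: change between the first and last sample in the window."""
    points = in_window(samples, start, end)
    if len(points) < 2:
        return 0.0
    return points[-1][1] - points[0][1]


def increase(samples, start, end):
    """Counter: total increase in the window, tolerating counter resets."""
    points = in_window(samples, start, end)
    total = 0.0
    for (_, prev), (_, cur) in zip(points, points[1:]):
        # A drop means the counter was reset (e.g. a restart), so it counted up from zero.
        total += cur - prev if cur >= prev else cur
    return total


def rate(samples, start, end):
    """Counter: average per-second increase over the time span the samples cover."""
    points = in_window(samples, start, end)
    if len(points) < 2:
        return 0.0
    span = points[-1][0] - points[0][0]
    if span <= 0:
        return 0.0
    return increase(points, start, end) / span
```

Note that `increase()` treats any drop as a reset, which is only correct for counters; that is why a gauge such as memory usage gets `delta()` instead.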

Sometimes a single metric can instantly tell you what went wrong in a system; other times you need to combine a few metrics to get a clear picture of what’s going on. A great example of something that’s instantly visible in your metrics is your application running out of memory and dying: the dreaded out-of-memory killer paid your application a visit and murdered it. If your metrics show the amount of available memory nearing zero over a period of time, it doesn’t take a genius to figure out what happened.
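Spotting that trend by eye works, but you can also let the numbers do it. A minimal sketch, assuming samples of available memory as time-ordered `(timestamp, bytes)` pairs: fit a straight line with ordinary least squares and estimate when it crosses zero. Prometheus’s `predict_linear()` works along similar lines; the function here is a hypothetical stand-alone version.

```python
def seconds_until_exhausted(samples):
    """Estimate seconds after the last sample until available memory hits zero.

    `samples` is a time-ordered list of (timestamp_seconds, available_bytes)
    pairs. Returns None when there is no downward trend to extrapolate.
    """
    n = len(samples)
    if n < 2:
        return None
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    # Ordinary least-squares slope: covariance(t, v) / variance(t).
    cov = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        return None
    slope = cov / var  # bytes per second
    if slope >= 0:
        return None  # memory is flat or recovering
    intercept = mean_v - slope * mean_t
    zero_at = -intercept / slope  # timestamp where the fitted line reaches zero
    return max(0.0, zero_at - samples[-1][0])
```

A straight line is a crude model, since real memory usage often grows in steps, but it is usually enough to tell “we have days” apart from “we have minutes”.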

Logs

Hate them or love them, logs are an essential part of any well-functioning application stack. Logs can tell you exactly what happened and when, which is invaluable information when trying to figure out a cause of death.

Logs differ from metrics in that they allow you to drill deep into an issue. They let you see exactly what the system was doing during a certain period of time: which code path was taken, which request headers were sent, which errors occurred, or even whole stack traces. Metrics, on the other hand, give you a general feel for how your application is functioning but don’t tell you exactly what happened inside it.
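Logs are most useful for this kind of digging when every line carries context. One common approach (an assumption here, not something this post prescribes) is structured logging, with one JSON object per line. The sketch below uses Python’s standard `logging` module with a custom formatter; the logger name and the `headers` field are hypothetical.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # The code path: which function and line emitted this log.
            "function": record.funcName,
            "line": record.lineno,
        }
        # Request headers passed in via logging's `extra=` argument, if any.
        headers = getattr(record, "headers", None)
        if headers is not None:
            entry["headers"] = headers
        # Full stack trace when logging from inside an exception handler.
        if record.exc_info:
            entry["stack_trace"] = self.formatException(record.exc_info)
        return json.dumps(entry)


def make_logger(stream) -> logging.Logger:
    """Build a logger that writes JSON lines to `stream`."""
    logger = logging.getLogger("shop.checkout")  # hypothetical logger name
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

Inside an `except` block, `logger.exception("checkout failed", extra={"headers": request_headers})` would then produce one line containing the message, the headers and the stack trace, which is exactly the kind of detail metrics cannot give you.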

Log centralization

It’s very important to have all your logs in a centralized location. You don’t want to hop from server to server, digging through hundreds of individual log files, while everything’s on fire. Some good options to consider are the ELK stack (Elasticsearch, Logstash, Kibana) or Graylog. Both let you search through and visualize large amounts of logs.
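As an illustration of shipping logs to one place, here is a sketch that formats a message in GELF, the JSON-based format Graylog accepts, and sends it over UDP. It assumes a Graylog GELF UDP input on the customary port 12201 at a hypothetical host name. Large messages would need GELF chunking, which this sketch leaves out.

```python
import json
import socket
import time

# Syslog severity numbers, which GELF uses for its "level" field.
SYSLOG_LEVELS = {"ERROR": 3, "WARNING": 4, "INFO": 6, "DEBUG": 7}

# Hypothetical Graylog host; 12201 is the customary GELF UDP port.
GRAYLOG_ADDRESS = ("graylog.example.internal", 12201)


def gelf_message(short_message: str, level: str = "INFO", host: str = "", **fields) -> bytes:
    """Build a GELF 1.1 payload; extra context goes into '_'-prefixed fields."""
    payload = {
        "version": "1.1",
        "host": host or socket.gethostname(),
        "short_message": short_message,
        "timestamp": time.time(),  # seconds since the epoch
        "level": SYSLOG_LEVELS[level],
    }
    for key, value in fields.items():
        if key == "id":
            raise ValueError("GELF reserves the '_id' field")
        payload[f"_{key}"] = value
    return json.dumps(payload).encode("utf-8")


def send_gelf(message: bytes, address=GRAYLOG_ADDRESS) -> None:
    """Fire-and-forget one uncompressed GELF message over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, address)
```

Because every server stamps its own `host` field, the central store can show one merged stream and still let you filter down to the machine that misbehaved.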

Creating insights out of logs & metrics

Now you’re gathering metrics about your application and they’re sitting there all snug in their fancy TSDB. But we want to visualize those metrics to make finding out what’s wrong easier and repeatable. This is where dashboards come into play. A dashboard takes a metric and displays it in a graphical format (graphs, tables, heatmaps, etc.), letting you see what’s going on inside your application and, when done right, spot issues before they even happen. I’m a big fan of Grafana for creating my dashboards.

Think of metrics as crude oil: great to have lots of it, but unusable until you refine it in some way. By creating dashboards, you refine your metrics into knowledge about your application, and armed with that knowledge you can take action to solve issues. It’s easier than it sounds, so bear with me.
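One part of that refining is reduction: a dashboard rarely plots every raw sample, and typically groups samples into fixed time buckets with one aggregated value per bucket. A rough sketch of that step, with hypothetical names and a mean as the default aggregation:

```python
from collections import defaultdict
from typing import Callable, Iterable, List, Tuple


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


def downsample(
    samples: Iterable[Tuple[float, float]],
    step: float,
    aggregate: Callable[[List[float]], float] = mean,
) -> List[Tuple[float, float]]:
    """Group raw (timestamp, value) samples into buckets of `step` seconds
    and reduce each bucket to a single point, oldest bucket first."""
    buckets = defaultdict(list)
    for t, v in samples:
        bucket_start = (t // step) * step
        buckets[bucket_start].append(v)
    return [(start, aggregate(values)) for start, values in sorted(buckets.items())]
```

The choice of aggregation matters: averaging smooths out short spikes, so for something like memory you might pass `max` instead to make sure the worst moment in each bucket stays visible.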

An example

Let’s take memory usage as an example. In its raw form, the metric isn’t very usable. But turn it into a dashboard that shows memory usage over time and all of a sudden it becomes knowledge about your system. You can look at the dashboard and clearly see that memory usage keeps increasing until, eventually, your application is killed.
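Grafana renders its graphs in the browser, but the core idea fits in a few lines of plain text too. This toy sketch draws memory-usage samples as a horizontal bar chart; the sample values and the `text_chart` name are made up for illustration.

```python
def text_chart(samples, width=40, unit="MiB"):
    """Render (timestamp, value) samples as a plain-text horizontal bar chart,
    with bars scaled so the largest value fills `width` characters."""
    peak = max(v for _, v in samples)
    rows = []
    for t, v in samples:
        bar = "#" * round(width * v / peak) if peak > 0 else ""
        rows.append(f"{t:>6}s |{bar:<{width}}| {v:g} {unit}")
    return "\n".join(rows)


if __name__ == "__main__":
    # Hypothetical memory usage samples: (seconds since start, MiB used).
    print(text_chart([(0, 310), (600, 520), (1200, 890), (1800, 1410), (2400, 1990)]))
```

Even in this crude form, a column of ever-longer bars makes the leak obvious at a glance, which is the whole point of turning a raw metric into a picture.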

With that knowledge, the next step is to figure out why your application is eating up so much memory, and that’s where the logs come in. They let you dive deep into this unwanted behavior by showing what was happening at the exact moment your application crashed.
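Once the metrics tell you when the crash happened, the logs worth reading are the ones just before it. A minimal sketch, assuming logs stored as one JSON object per line with an ISO 8601 `time` field (a hypothetical format), that keeps only entries within a window around the crash:

```python
import json
from datetime import datetime, timedelta


def logs_around(lines, crash_time, before=timedelta(minutes=5), after=timedelta(seconds=30)):
    """Return parsed log entries from `before` the crash until `after` it, oldest first."""
    start, end = crash_time - before, crash_time + after
    selected = []
    for line in lines:
        entry = json.loads(line)
        when = datetime.fromisoformat(entry["time"])
        if start <= when <= end:
            selected.append((when, entry))
    # Sort on the parsed timestamp so entries from different sources interleave correctly.
    selected.sort(key=lambda pair: pair[0])
    return [entry for _, entry in selected]
```

In a centralized log store you would express the same thing as a time-range filter in its search interface; the window sizes here are arbitrary defaults to tune per incident.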

If you have more data, use it! The more clearly you can paint the picture, the closer you’ll get to identifying the cause of death.

What’s next?

There’s more to observability than gathering metrics and logs. But with these two sources of information you can debug the majority of issues that crop up, and with both fronts covered you’ll hopefully feel more confident tackling production issues when they occur.

But don’t think you’re off the hook yet! Oh no, there’s so much more you can do to improve observability and your monitoring practices. In a future blog post, I’ll go into more detail on how we handle monitoring here at Spindle and touch on alerting, Prometheus and exporters.
